Bagging Machine Learning Algorithm

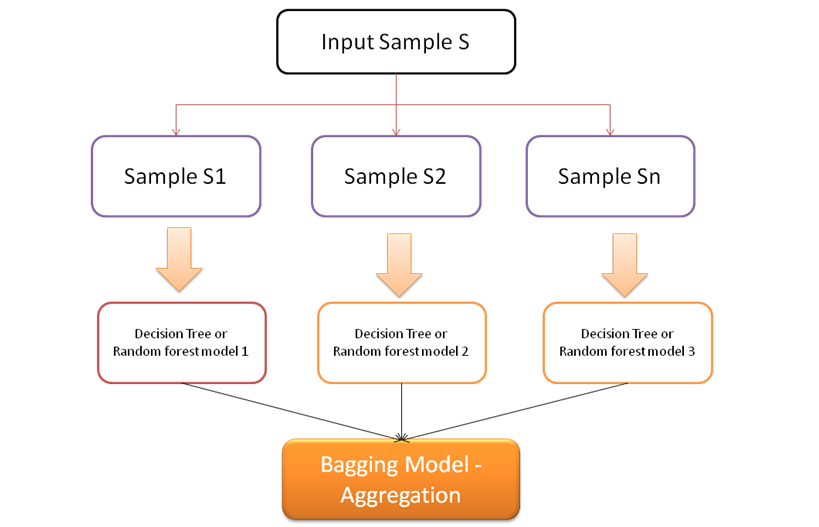

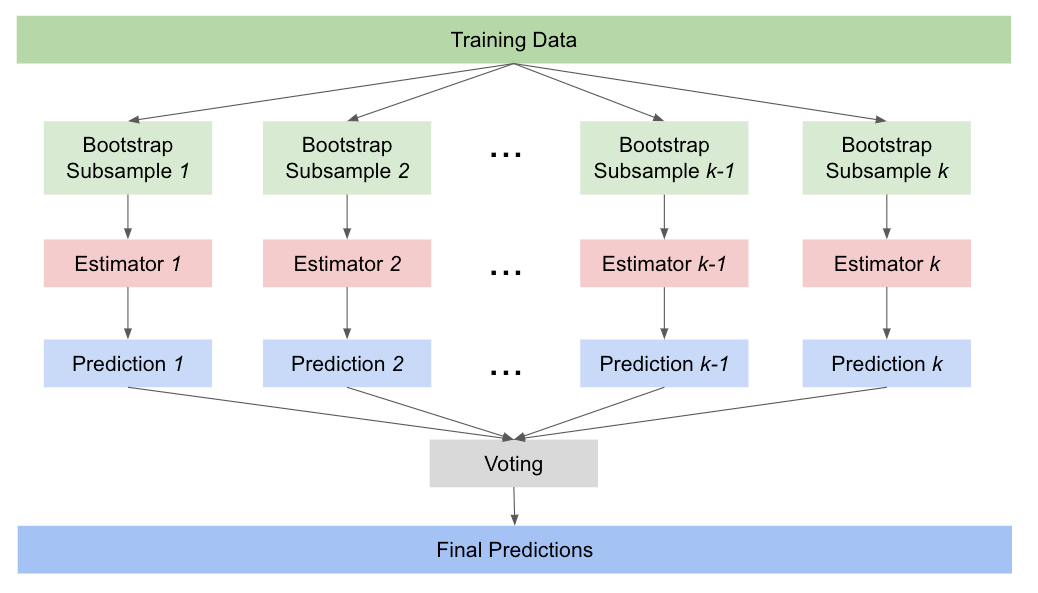

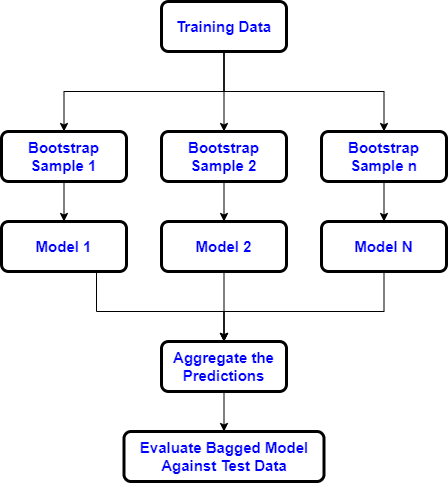

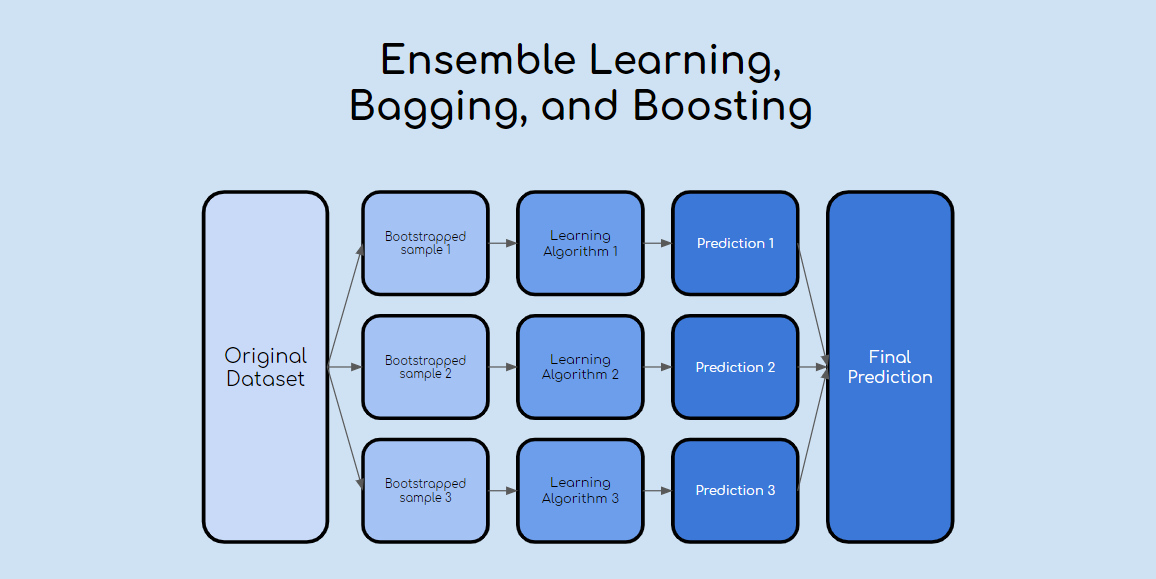

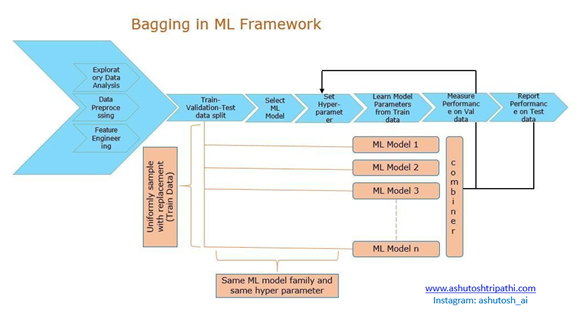

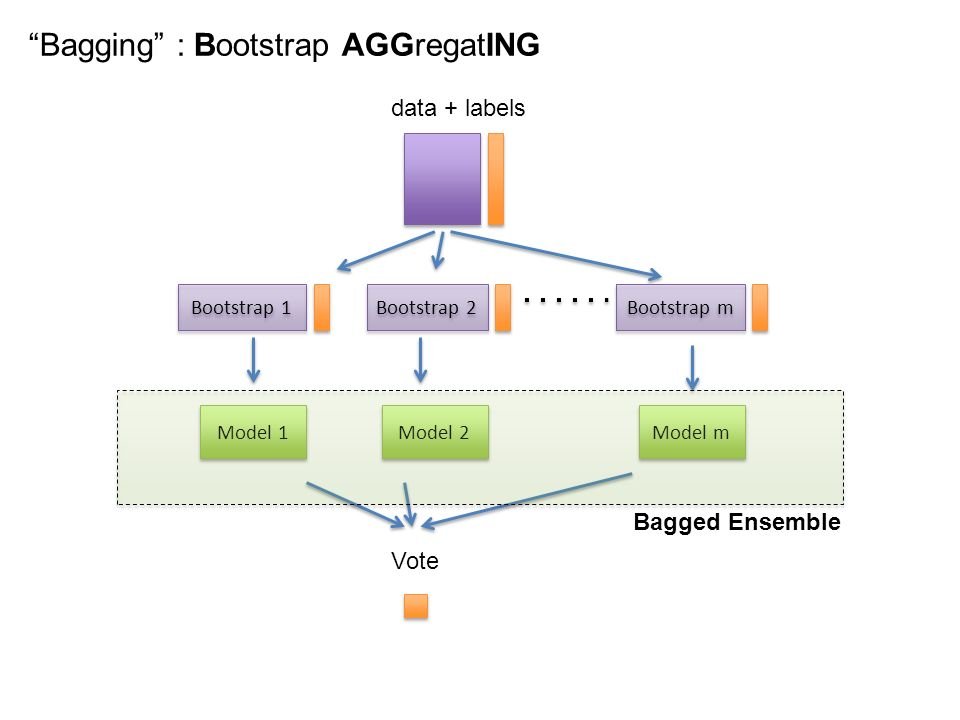

Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset.
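The "bootstrap" half of the name refers to resampling the training set with replacement. Below is a minimal sketch of that one step using only Python's standard library. The function name `bootstrap_sample` is hypothetical and exists only for this illustration.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def bootstrap_sample(data: Sequence[T], rng: random.Random) -> Tuple[List[T], List[int]]:
    """Draw len(data) items uniformly at random *with replacement*.

    Also returns the drawn indices, so the caller can see which rows were
    repeated and which were left out of this particular sample.
    """
    n = len(data)
    indices = [rng.randrange(n) for _ in range(n)]
    return [data[i] for i in indices], indices


rng = random.Random(0)  # fixed seed so the run is reproducible
data = list(range(10))
sample, idx = bootstrap_sample(data, rng)
print(len(sample))    # same size as the original dataset
print(len(set(idx)))  # usually fewer distinct rows: some repeat, some are missing
```

Each bootstrap sample has the same size as the original data but is a different "view" of it. For large datasets, about 1 - 1/e ≈ 63.2% of the distinct original rows are expected to appear in any one sample.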

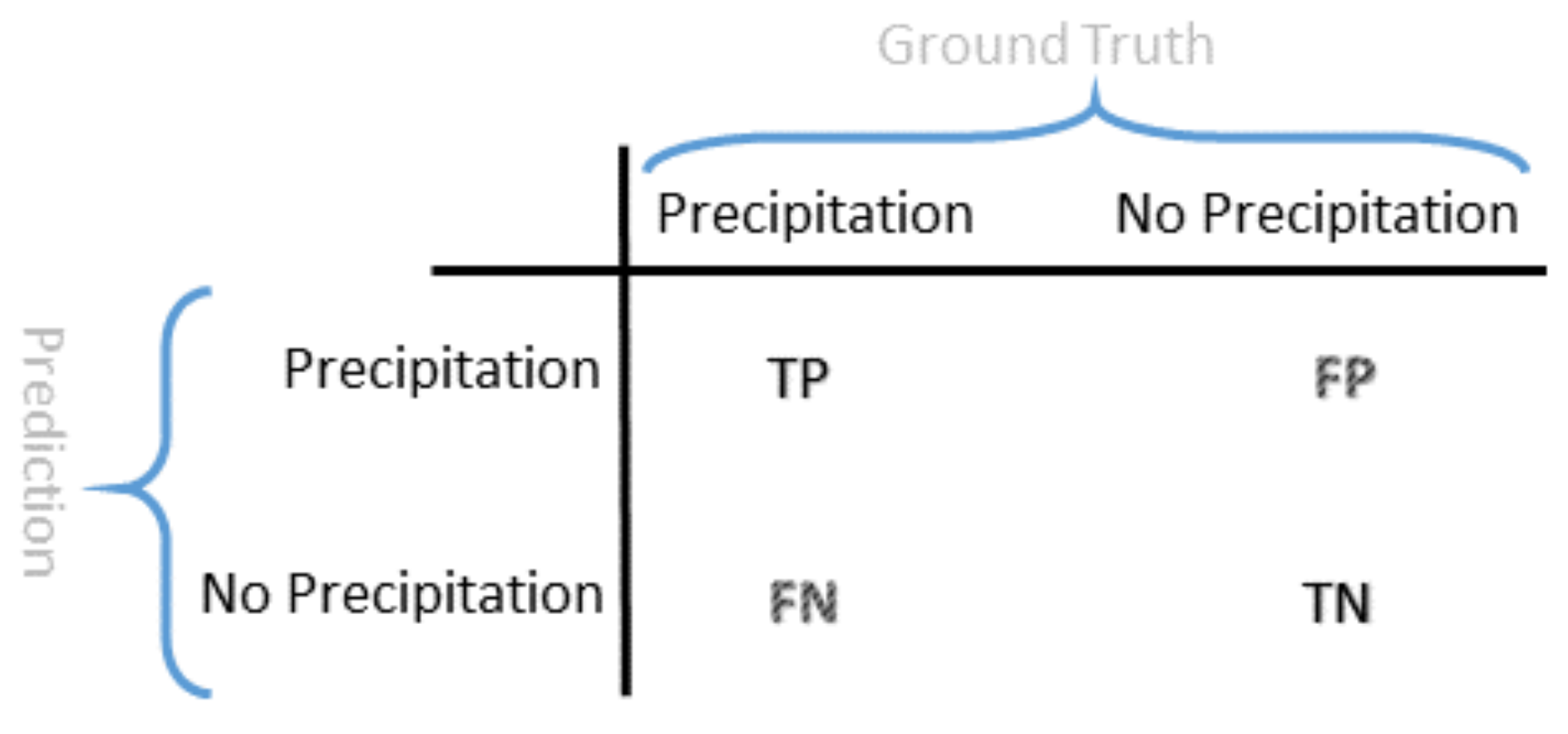

Informatics, Free Full Text: Bagging Machine Learning Algorithms. A Generic Computing Framework Based on Machine Learning Methods for Regional Rainfall Forecasting in Upstate New York

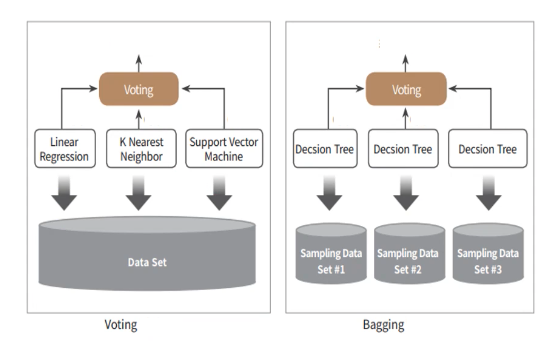

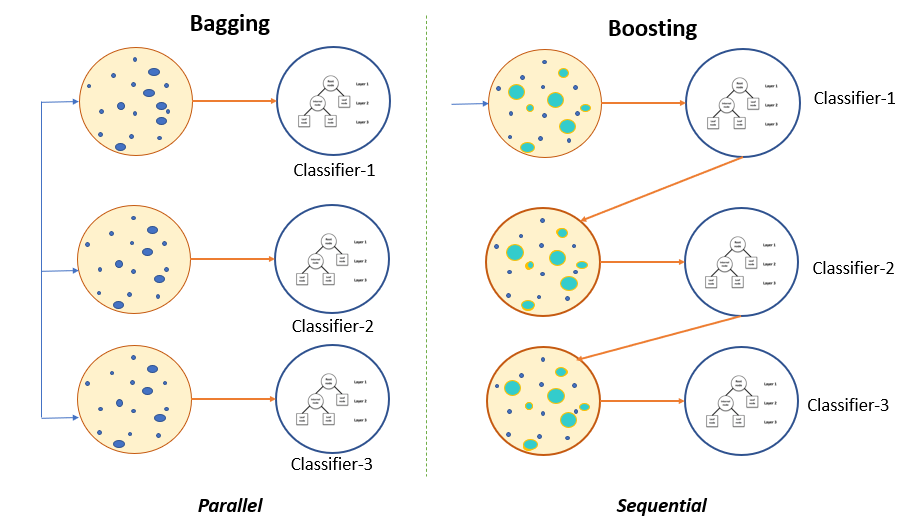

Bagging and boosting are the two most prominent ensemble techniques.

Bagging is a model-averaging technique. Before looking at bagging and boosting in detail, let's first get an idea of what ensemble learning is. Bagging aims to improve the accuracy and stability of machine learning models.
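Because bagging is a model-averaging technique, its aggregation step is short. The sketch below shows the two rules usually used with bagging: averaging numeric predictions for regression, and a plurality vote for classification. The function names `aggregate_regression` and `aggregate_classification` are hypothetical.

```python
from collections import Counter
from statistics import mean
from typing import Hashable, Sequence


def aggregate_regression(predictions: Sequence[float]) -> float:
    """Regression: average the base models' numeric predictions."""
    return mean(predictions)


def aggregate_classification(predictions: Sequence[Hashable]) -> Hashable:
    """Classification: take a plurality (majority) vote.

    Counter.most_common lists equal counts in first-seen order,
    so ties go to the label that appeared first.
    """
    return Counter(predictions).most_common(1)[0][0]


print(aggregate_regression([2.0, 3.0, 4.0]))               # 3.0
print(aggregate_classification(["spam", "ham", "spam"]))   # spam
```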

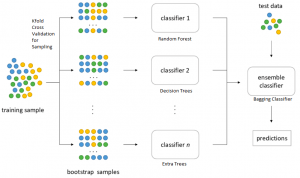

Bagging is an ensemble machine learning algorithm that combines the predictions from many decision trees. Ensemble learning in general works in a similar way: when building a machine learning model, we can either use a single algorithm or combine multiple algorithms, and ensemble learning combines them.
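To keep the example short, the sketch below bags decision *stumps* (depth-1 decision trees) instead of full decision trees, which is a simplification. Each stump is fit on its own bootstrap sample, and the ensemble predicts by majority vote. All function names and the toy data are hypothetical, and only the standard library is used.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

# Decision stump: (feature index, threshold, label if x[f] <= threshold, label otherwise)
Stump = Tuple[int, float, int, int]


def majority(labels: Sequence[int]) -> int:
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X: Sequence[Sequence[float]], y: Sequence[int]) -> Stump:
    """Try every feature/threshold pair; keep the split with the fewest training errors."""
    fallback = majority(y)  # label used when one side of a split is empty
    best: Stump = (0, float("inf"), fallback, fallback)
    best_err = len(y) + 1
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            l_lab = majority(left) if left else fallback
            r_lab = majority(right) if right else fallback
            err = sum(lab != l_lab for lab in left) + sum(lab != r_lab for lab in right)
            if err < best_err:
                best, best_err = (f, t, l_lab, r_lab), err
    return best


def predict_stump(stump: Stump, x: Sequence[float]) -> int:
    f, t, l_lab, r_lab = stump
    return l_lab if x[f] <= t else r_lab


def fit_bagged_stumps(X, y, n_estimators: int = 25, seed: int = 0) -> List[Stump]:
    """Fit each stump on its own bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    n = len(X)
    stumps = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        stumps.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return stumps


def predict_bagged(stumps: Sequence[Stump], x: Sequence[float]) -> int:
    """Aggregate the individual stumps by majority vote."""
    return majority([predict_stump(s, x) for s in stumps])


X = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
stumps = fit_bagged_stumps(X, y)
print(predict_bagged(stumps, [-1.0]), predict_bagged(stumps, [10.0]))
```

Swapping `fit_stump` for a deeper tree learner would give the usual bagged-trees setup. Deeper trees have higher variance, which is where averaging pays off most.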

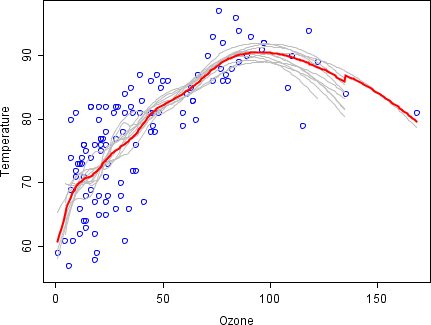

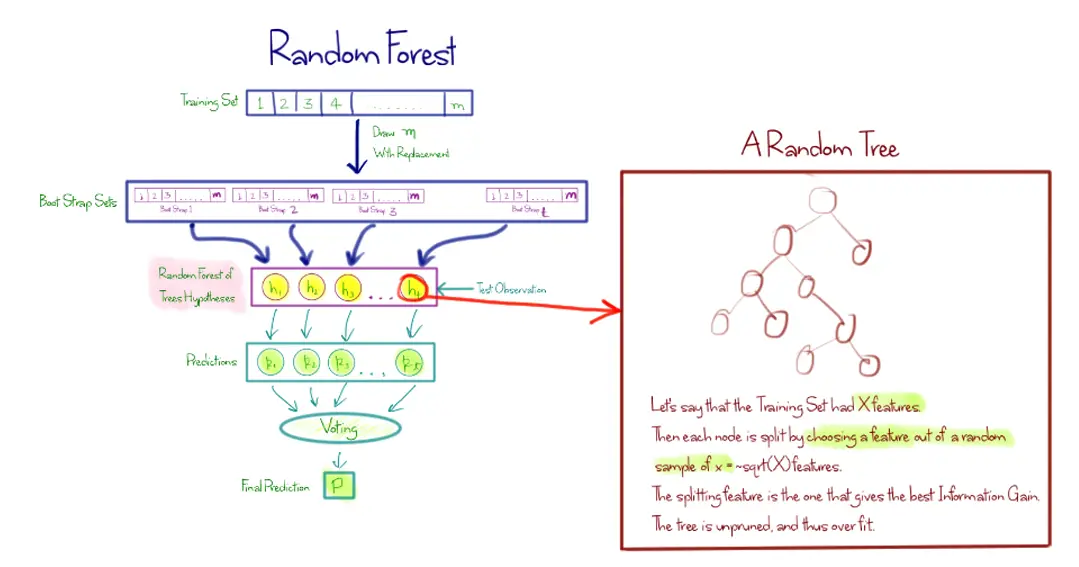

Bagging algorithms in Python. Let's assume we have a sample. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees.
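Starting from "a sample", the bootstrap procedure itself can be shown without any model. The sketch below estimates the mean of a small sample and its standard error by resampling with replacement. The sample values are made up for illustration, and `bootstrap_means` is a hypothetical helper.

```python
import random
from statistics import mean, stdev


def bootstrap_means(sample, n_resamples=1000, seed=0):
    """Resample `sample` with replacement many times and record each resample's mean."""
    rng = random.Random(seed)
    n = len(sample)
    return [mean(rng.choices(sample, k=n)) for _ in range(n_resamples)]


sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 4.9]  # made-up illustrative values
means = bootstrap_means(sample)
print(f"sample mean          = {mean(sample):.3f}")
print(f"bootstrap mean       = {mean(means):.3f}")
print(f"bootstrap std. error = {stdev(means):.3f}")
```

Bagging uses the same resampling loop. Instead of computing a mean on each resample, it fits one model per resample and averages (or votes over) their predictions.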

Bagging and boosting are the two popular ensemble methods. The full name of bagging is the bootstrap aggregation approach, which belongs to the group of machine learning ensemble meta-algorithms (Kadavi et al.).

Which of the following is a widely used and effective machine learning algorithm based on the idea of bagging? (A) Random Forest, (B) Regression, (C) …

Ensemble methods can help improve algorithm accuracy or make a model more robust. Ensemble learning is the process of combining numerous individual learners to produce a better learner.
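A quick calculation shows why combining learners can produce a better learner. It makes a strong, loudly stated assumption: every classifier is correct with the same probability `p`, *independently* of the others. Bagged models are trained on overlapping bootstrap samples, so their errors are correlated and the real gain is smaller. The function name is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n_models: int) -> float:
    """Probability that a strict majority of n_models independent classifiers,
    each correct with probability p, votes for the right answer (n_models odd)."""
    need = n_models // 2 + 1  # smallest strict majority
    return sum(comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
               for k in range(need, n_models + 1))


for n in (1, 5, 25):
    print(n, round(majority_vote_accuracy(0.7, n), 4))
```

With `p = 0.7`, five independent voters are right about 83.7% of the time, compared with 70% for a single one.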

Two examples of this are boosting and bagging. Machine learning is a subfield of artificial intelligence that gives models the ability to learn on their own, using algorithms and data, without being explicitly programmed. Boosting and bagging are must…

Bootstrap aggregation (bagging) is an ensembling method that attempts to reduce overfitting in classification or regression problems. This study aims to develop an objective and useful automatic scoring model for open-ended questions using machine learning algorithms. Bootstrap aggregating, also called bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.
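"Meta-algorithm" means bagging wraps *any* base learner. The sketch below makes that explicit: `bag` only needs a function `learner(xs, ys) -> model`. The base learner here is a simple least-squares line, chosen only because it is short. Both `fit_line` and `bag` are hypothetical names. A low-variance learner like this gains little from bagging. Unstable learners such as deep decision trees benefit most.

```python
import random
from statistics import mean
from typing import Callable, Sequence

Model = Callable[[float], float]
Learner = Callable[[Sequence[float], Sequence[float]], Model]


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Hypothetical base learner: ordinary least-squares line y = a + b*x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:  # all x identical in this bootstrap sample: predict the mean
        return lambda x: my
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    return lambda x: a + b * x


def bag(learner: Learner, xs, ys, n_estimators: int = 50, seed: int = 0) -> Model:
    """Fit `learner` on bootstrap samples and average the resulting models."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample indices
        models.append(learner([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)


xs = [float(i) for i in range(10)]
ys = [2 * x + 1 for x in xs]
predict = bag(fit_line, xs, ys)
print(predict(10.0))
```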

An ensemble method is a machine learning technique that helps multiple models in … Boosting and bagging are topics that … Within the scope of this aim, an …

In statistics and machine learning, bagging is significant because it helps prevent models from overfitting the data.
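A short variance calculation shows why averaging helps against overfitting. Assume, as a simplification, that each of the $B$ bagged models $\hat f_b(x)$ has the same variance $\sigma^2$. Assume also that any two of them have correlation $\rho \ge 0$; they are correlated because their bootstrap samples overlap. The notation is introduced here for illustration.

$$
\begin{aligned}
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
&= \frac{1}{B^2}\left(B\,\sigma^2 + B(B-1)\,\rho\,\sigma^2\right) \\
&= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
\end{aligned}
$$

Under these assumptions the average has the same expectation as each individual $\hat f_b(x)$, so the bias is unchanged. The second variance term shrinks as $B$ grows. The first term, $\rho\,\sigma^2$, does not, which is why reducing the correlation between models (as random forests try to do) matters.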

Ensemble Methods Explained in Plain English: Bagging, by Claudia Ng (Towards AI)

Bagging, Boosting and Stacking in Machine Learning (Drops of AI)

Ensemble Learning: Bagging, Boosting, Stacking (Kaggle)

A Guide to Ensemble Learning: Ensemble Learning, Need, Benefits and …, by Nikita Sharma (Heartbeat)

Bootstrap Aggregating (Wikipedia)

Introduction to Bagging and Ensemble Methods (Paperspace Blog)

Ensemble Learning, Bagging, and Boosting Explained in 3 Minutes, by Terence Shin (Towards Data Science)

Ensemble Learning: Voting and Bagging

What Is the Difference Between Bagging and Boosting? (Quantdare)

Bagging Algorithms in Python (Engineering Education (EngEd) Program, Section)

What Is Bagging in Ensemble Learning? (Data Science Duniya)

Ensemble Learning: Bagging and Boosting, by Fernando Lopez (Towards Data Science)

Bagging and Random Forest Ensemble Algorithms for Machine Learning

Ensemble Classifier, Data Mining (GeeksforGeeks)

Paige Bailey #BlackLivesMatter on Twitter: "B is for Bootstrapped Aggregation (Bagging). This is an ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification"

Bagging Classifier Python Code Example (Data Analytics)

A Short Introduction: Bagging and Random Forest Algorithms (Blockchain and Cloud)